Touch:See:Hear

Touch:See:Hear is a multi-sensory, interactive, digital art installation for adults with learning disabilities.

This artistic R&D postdoc project was developed in collaboration with the LEVEL Centre – a custom-designed multimedia arts space in Rowsley, Derbyshire, UK. Close to 200 users a week benefit from its facilities and programming, which aim to promote their unique creativity, develop their individual artistic language and challenge perceptions of learning disabled culture.

The project ran from February to December 2016. It was initially supported by a 12-week, full-time AHRC Cultural Exchange Fellowship via the Manchester Institute for Research & Innovation in Art & Design (MIRIAD), Manchester School of Art, Manchester Met; subsequently extended to 6 months via additional AHRC funding; and, in the later stages, continued part-time with support from LEVEL.

While LEVEL has a wealth of experience and expertise in ‘guided’ creative activities, they also have a remit to develop digital tools that enable users to connect with their environment through ‘autonomous’ multimedia and creative technologies. Accordingly, they initiated the ‘Inter-ACT + Re-ACT’ programme – simple, interactive installations (e.g. a digital ‘hall of mirrors’) that engage people through their journey around the building. No instructions are given; staff simply observe the level of reaction and engagement to try to work out who does what and why.

The LEVEL Centre has a multimedia space – a 7 m × 7 m ‘white cube’ with projection on each wall, four wall-mounted speakers, a basic theatre lighting rig and wireless/wired data services. It was mainly used as a performing arts studio and ‘screening’ room, usually with video projected on one wall, though occasionally for more ambitious multiscreen projections such as their biannual PURPLE PEACH club-style events for users, their carers and friends.

I thought this project could help LEVEL make more of this somewhat underused resource and, through it, respond to key questions raised by the ‘Inter-ACT + Re-ACT’ programme: “How might learning disabled adults engage with playful multi-media and multi-sensory environments embedded into the fabric of the building?” and “What unique benefits might this type of activity realise?” So I suggested prototyping a technical infrastructure that would realise an immersive, multi-screen audiovisual environment with spatialised audio, responsive lighting and a custom-designed control and tracking system. More than just an immersive experience, it aimed to be a distinct artwork with a purpose – a tool for individual and group audiovisual composition.

The project had several relatively complex technical aspects but, in overview, realised:

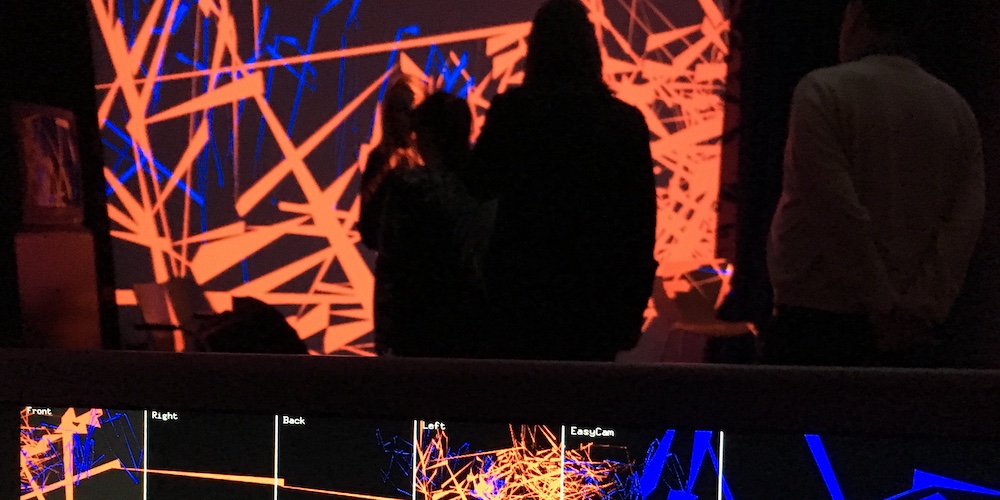

- a flexible but robust four-screen projection and monitoring system – developed in the creative C++ toolkit, openFrameworks (oF) v0.9.2 and run on a 3.5 GHz 6-Core Intel Xeon E5, 32GB RAM Mac Pro (Late 2013);

- spatialised audio using LEVEL’s in-house four-speaker audio system and an Edirol FA-101 multi-channel audio interface – initially via the ofxFmodSoundPlayer2 and ofxMultiDeviceSoundPlayer oF addons and later via MIDI adjustment of individual channel Sends in Ableton Live;

- DMX control of up to six Equinox Fusion 150 moving heads;

- and intuitive, custom-designed, hand-held, wireless controllers for up to four users – integrating Posyx, a hardware/software system for tracking motion and position in real-time. This last element – technical development with Posyx – became a significant aspect of the project, exploring the creative potential of a system designed primarily for tracking items in warehouse settings.

Each Posyx ‘tag’ – essentially an Arduino Shield – has an on-board microcontroller, a UWB (Ultra Wide Band) transceiver and a sensor array comprising an accelerometer, gyroscope, magnetometer and pressure sensor. Once powered, a tag automatically transmits the data from its sensors wirelessly every 15 ms or so as a string of values (28 in all). Tags have their own unique ID but are also multifunctional – each can act as a transmitter, receiver or anchor. Motion tracking requires a minimum of two tags: one as a transmitter and a second as a receiver, fitted on top of an Arduino Uno, which outputs the data received from the transmitter tag (or tags) to the computer via USB. Position tracking requires a minimum of 5 tags (for 2D) and up to 8 tags (for 3D) – a transmitter, a receiver and anchors, the latter mounted at different heights and known positions around the 4 walls of the room. Once configured, the transmitter uses these anchors to work out its position within the room using a process similar to triangulation, and then forwards this data on to the receiver along with its motion data. Accuracy is up to 10 cm in the XY-axes, though I found data in the Z-axis (height) less robust.
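To make the idea of working out a position from anchors at known locations more concrete, here is a minimal C++ sketch of 2D trilateration from measured distances. It is an illustration only: it is not taken from the project code or from the Posyx firmware, whose actual algorithm is not described here, and the names `Anchor`, `Point2D` and `trilaterate2D` are hypothetical. It assumes each anchor reports a distance (range) to the tag in metres and solves the resulting circle equations by least squares.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical types for illustration; not part of any Posyx library.
struct Anchor {
    double x, y;   // known anchor position in the room (metres)
    double range;  // measured distance from anchor to tag (metres)
};

struct Point2D {
    double x, y;
};

// Estimates the tag position from three or more anchors.
// Subtracting the first anchor's circle equation from each of the others
// gives linear equations in (x, y), solved here via 2x2 normal equations.
// Returns false if there are too few anchors or they lie on one line.
bool trilaterate2D(const std::vector<Anchor>& anchors, Point2D& out) {
    if (anchors.size() < 3) return false;

    const Anchor& a0 = anchors[0];
    double s00 = 0.0, s01 = 0.0, s11 = 0.0;  // entries of A^T A
    double t0 = 0.0, t1 = 0.0;               // entries of A^T b

    for (std::size_t i = 1; i < anchors.size(); ++i) {
        const Anchor& ai = anchors[i];
        assert(ai.range >= 0.0);  // a distance cannot be negative

        const double ax = 2.0 * (ai.x - a0.x);
        const double ay = 2.0 * (ai.y - a0.y);
        const double b = a0.range * a0.range - ai.range * ai.range
                       + ai.x * ai.x - a0.x * a0.x
                       + ai.y * ai.y - a0.y * a0.y;

        s00 += ax * ax;
        s01 += ax * ay;
        s11 += ay * ay;
        t0 += ax * b;
        t1 += ay * b;
    }

    const double det = s00 * s11 - s01 * s01;
    if (std::fabs(det) < 1e-12) return false;  // anchors are collinear

    out.x = (s11 * t0 - s01 * t1) / det;
    out.y = (s00 * t1 - s01 * t0) / det;
    return true;
}
```

With four anchors in the corners of a 7 m × 7 m room and exact ranges, the function recovers the tag position; with noisy ranges it returns a least-squares estimate. A 3D version would need an extra unknown and anchors at different heights.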

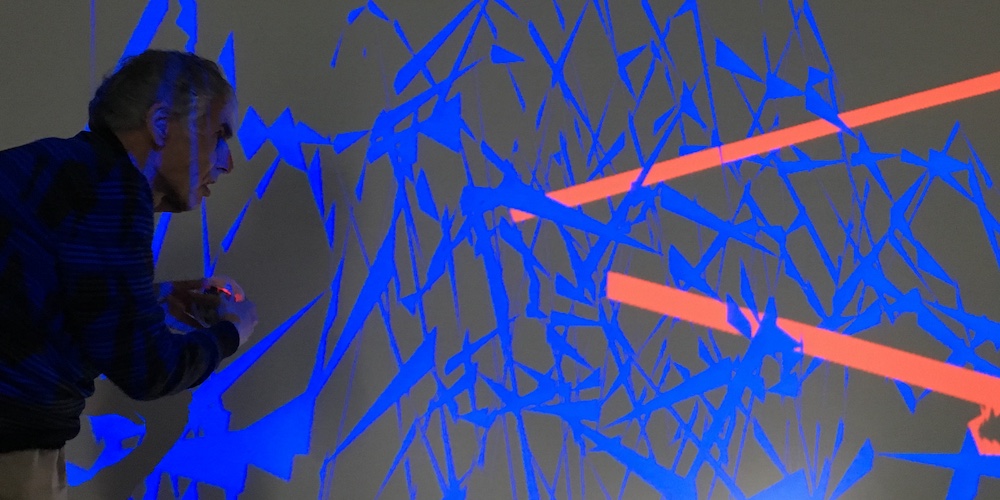

Experimenting with the system, I found that I could not only send the Posyx tag data wirelessly to a computer but also simultaneously capture it ‘in situ’ on another microcontroller in the hand-held device. This meant I could add further inputs (a distance sensor and capacitive buttons) and outputs (a compact transducer, vibration motors and LEDs), so that the device could also make noises, vibrate and light up in different ways in response to how it was being used.

Creative development (with input from collaborator Ben Lycett) realised a number of outputs:

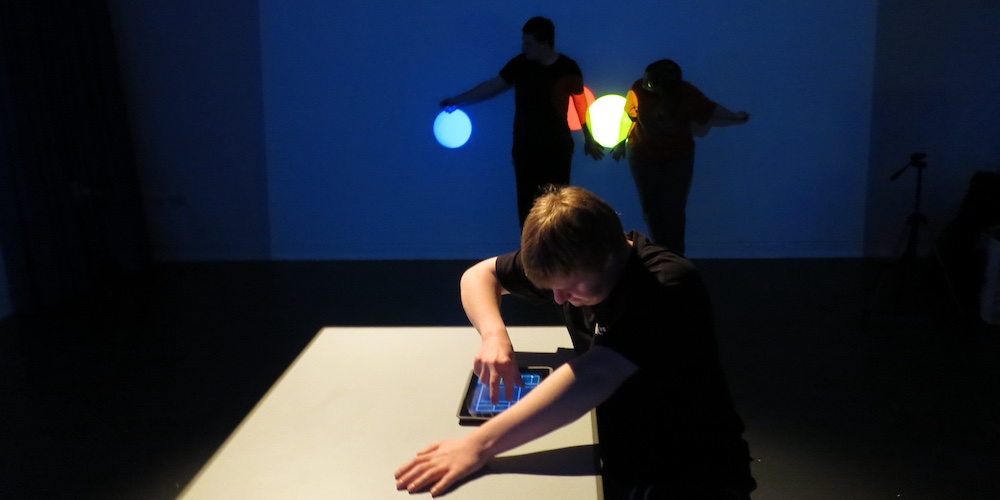

- a series of initial test oF projects using simple shapes and bold primary colours to explore alternative relationships between the screens – as either independent of each other, an extended desktop, ‘windows’ on to a 3D virtual environment or ‘mirrors’ of activity within the physical space – triggered via various TouchOSC interfaces on an iPad;

- a series of test oF projects that explored the use of panoramic images and video;

- an adaptation of the oF cameraRibbonExample project – multiple ribbons displayed across all four screens (as ‘windows’ into a 3D virtual environment), ‘drawn’ via motion tracking data from the Posyx tag in the hand-held controllers and with additional spatialised sound via Posyx tag data converted to MIDI and triggering various softsynth VSTs in Ableton Live;

- an adaptation of earlier PhD development exploring the Superformula – a generalisation of the superellipse proposed by Johan Gielis (circa 2000), which he suggests can be used to describe many complex shapes and curves found in nature – to create an ‘underwater’ environment of diatoms, the all-pervasive microalgae found in the world’s oceans, waterways and soils, which have a remarkable variety of shapes. Positional data from a Posyx tag was overlaid on to a 2D control grid and adjusted different variables within the Superformula, changing the shape and size of a virtual diatom (seen from each of its sides on the four screens) as you moved around the space. The 2D grid also controlled the audio output: different locations in the room highlighted different sonic elements, while movement immediately around a position adjusted notes in the scale.
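As a rough illustration of the last item, the sketch below evaluates the Superformula in plain C++ and maps a position in the room on to two of its variables. It is not the project’s code: the struct and function names, the choice of which variables the position controls (`m` and `n1`) and their ranges are all assumptions made for the example; only the 7 m room size comes from the description above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

// Parameters of the Superformula:
//   r(phi) = ( |cos(m*phi/4) / a|^n2 + |sin(m*phi/4) / b|^n3 )^(-1/n1)
struct SuperformulaParams {
    double a = 1.0, b = 1.0;
    double m = 4.0;
    double n1 = 2.0, n2 = 2.0, n3 = 2.0;
};

// Radius of the curve at angle phi (radians).
double superformulaRadius(double phi, const SuperformulaParams& p) {
    assert(p.a != 0.0 && p.b != 0.0 && p.n1 != 0.0);
    const double t = p.m * phi / 4.0;
    const double c = std::pow(std::fabs(std::cos(t) / p.a), p.n2);
    const double s = std::pow(std::fabs(std::sin(t) / p.b), p.n3);
    return std::pow(c + s, -1.0 / p.n1);
}

// Hypothetical mapping from a tag position (metres) to shape variables:
// x selects the symmetry m (2..12), y sets the exponent n1 (0.5..5.0).
SuperformulaParams paramsFromPosition(double xMetres, double yMetres,
                                      double roomSize = 7.0) {
    assert(roomSize > 0.0);
    const double u = std::min(1.0, std::max(0.0, xMetres / roomSize));
    const double v = std::min(1.0, std::max(0.0, yMetres / roomSize));

    SuperformulaParams p;
    p.m = 2.0 + std::round(u * 10.0);
    p.n1 = 0.5 + v * 4.5;
    return p;
}

// Samples the closed outline as (x, y) points, ready to be drawn.
std::vector<std::pair<double, double>> outline(const SuperformulaParams& p,
                                               int steps) {
    assert(steps > 0);
    const double twoPi = 2.0 * std::acos(-1.0);
    std::vector<std::pair<double, double>> points;
    points.reserve(static_cast<std::size_t>(steps));
    for (int i = 0; i < steps; ++i) {
        const double phi = twoPi * i / steps;
        const double r = superformulaRadius(phi, p);
        points.emplace_back(r * std::cos(phi), r * std::sin(phi));
    }
    return points;
}
```

With the default parameters the curve is a unit circle, which makes a handy sanity check. In an oF project the sampled points could be passed to a polyline or mesh for drawing, and the same normalised grid position could also drive the audio parameters.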

The project also helped me to think about and respond to the unique challenges of developing work in this context – particularly my underlying assumptions and thinking around the nature of interactivity, agency, representation and attention within an immersive, interactive environment. How should work of this nature respond to a group of users with differing needs, who don’t sequence in the same way as able-bodied users and who have differing abilities in tracking multiple objects with varying movement and speed? Considering this ‘difference’ required an alternative approach to the design process. What emerged was the notion of ‘Designing for Difference’ – a more considered and reflective approach around the themes of the senses, assistive technologies and digital creativity.

There’s an (incomplete) online journal documenting the emerging and iterative design process.

A demo video including an overview of the custom-made ‘sensor ball’ controller and a slideshow and footage of LEVEL staff and users testing the system is embedded below.